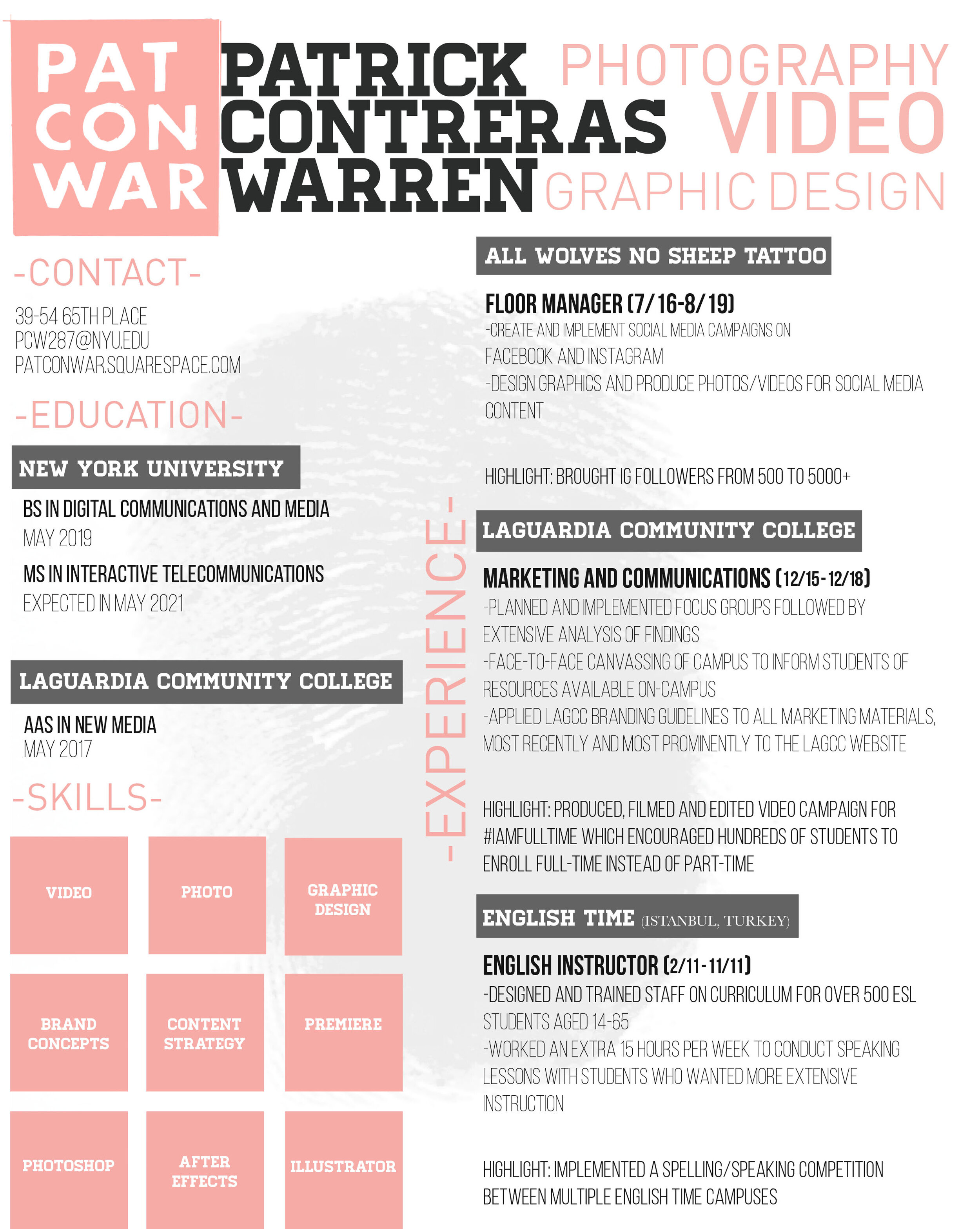

In the sketch above, two copper plates sit next to a computer keyboard, connected to an Arduino behind the monitor. The idea for “The Stress Manager” comes from an article suggesting that taking time to do nothing can help you get more done. In this scenario, the corporation decides it can spare a minute every hour, but instead of giving the whole minute at once and risking a break in productivity, it decides that 10 seconds every 10 minutes would be ideal for its bottom line. With “The Stress Manager”, an employee in the middle of work would have their screen filled with the word “STRESS” on a bright red background. Their keyboard and mouse would also deactivate while the word is on the screen. The only way to remove the word is to place both hands on the copper plates and hold them there as the red fades to white and the word disappears.
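
The timing and fade behavior described above could be sketched roughly as follows, in plain JavaScript so the functions could be called from a p5 `draw()` loop. This is only an illustration under stated assumptions, not the code of the actual sketch: the function and constant names are hypothetical, the fade is assumed to last the full 10 seconds, and lifting a hand is assumed to reset the fade back to red.

```javascript
// Hypothetical constants taken from the scenario: a 10-second pause every 10 minutes.
const INTERVAL_MS = 10 * 60 * 1000; // time between "STRESS" screens
const HOLD_MS = 10 * 1000;          // assumed: how long both hands must stay on the plates

// True once enough working time has passed to trigger the "STRESS" screen.
function isBreakDue(msSinceLastBreak) {
  return msSinceLastBreak >= INTERVAL_MS;
}

// Advance the hold timer only while BOTH plates are touched.
// Assumption: releasing either plate resets the timer to zero.
function updateHeldMs(heldMs, leftTouched, rightTouched, dtMs) {
  return leftTouched && rightTouched ? heldMs + dtMs : 0;
}

// Background color as [r, g, b], fading linearly from red to white as the hold progresses.
function backgroundColor(heldMs) {
  const t = Math.min(Math.max(heldMs / HOLD_MS, 0), 1); // clamp progress to 0..1
  const c = Math.round(255 * t);
  return [255, c, c];
}

// The word disappears (and input would be re-enabled) once the hold is complete.
function isDismissed(heldMs) {
  return heldMs >= HOLD_MS;
}
```

In a p5 sketch, `draw()` could call `updateHeldMs` each frame with `deltaTime` and the two touch readings sent from the Arduino, then pass the result of `backgroundColor` to `background()`. How the touch readings reach the browser is left out here, since the write-up does not describe it.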

The inspiration for the visualization of this piece came from an episode of Radiolab called “Sex-Ed”. In the following excerpt, a woman describes dealing with pain from cramps.

======================

MOLLY: In that moment, Sindha became 11 years old again. It was her first period, and the pain was terrible.

SINDHA AGHA: I was laying in bed. My mom couldn't be there. And she was, you know, almost always there but she had to be at work and I was having really bad cramps. So it was my dad and my uncle leaning over me trying to help me, and I was just, like, mortified but I was in too much pain to, like, really worry about it. And my dad turned on Gregorian chants and he burned some incense and he started waving it over my head and he was saying, like, "Just track the smoke with your eyes and just follow it. Follow it. Okay. Imagine you are the smoke and you're just floating." And I was really committing to this. I was like, "Okay, I'm the smoke!" And then he was like, "Okay, close your eyes. Imagine a color. What color you seeing?" I was like, "Red." Obviously, because I was on my period.

MOLLY: Sindha realized in that moment she could actually see the pain. It was a thing that had a shape to it that she could identify.

SINDHA AGHA: And then he's like, "Okay, and now try to change it into a different color with your brain." And I was like, "I guess blue."

MOLLY: Sindha found herself thinking back to that moment, and once again talking to her dad and trying to transform the color of the pain.

SINDHA AGHA: I remember standing on the little staircase that leads up to the plane, and I was, like, gripping onto the bar trying not to fall over and, like, gritting my teeth, just like clenching my jaw. And I was just like, "Okay, the color red. Okay, I see it. Yeah, I see it. All right. Okay, come on. Turn into something else. Pink maybe. Okay. All right, whew!"

======================

Reflection:

It’s strange how I’ve designed this assignment from a pretty toxic point of view, where the foundation is the exploitation of self-care techniques. Even knowing all of that going in, there was still some peace and enjoyment to be found in the experience. I could actually picture myself enjoying this in a workspace, even with its micromanaged time schedule. I still think it’s a gross abuse of power, but maybe that’s the point: these corporations could give you seconds of peace, and even though you deserve hours of it, you’ll take the seconds because you still enjoy peace. The corporation gets to look like it cares, and somehow you feel indebted to it.

Link to p5 sketch (only works with “The Stress Manager” attached)